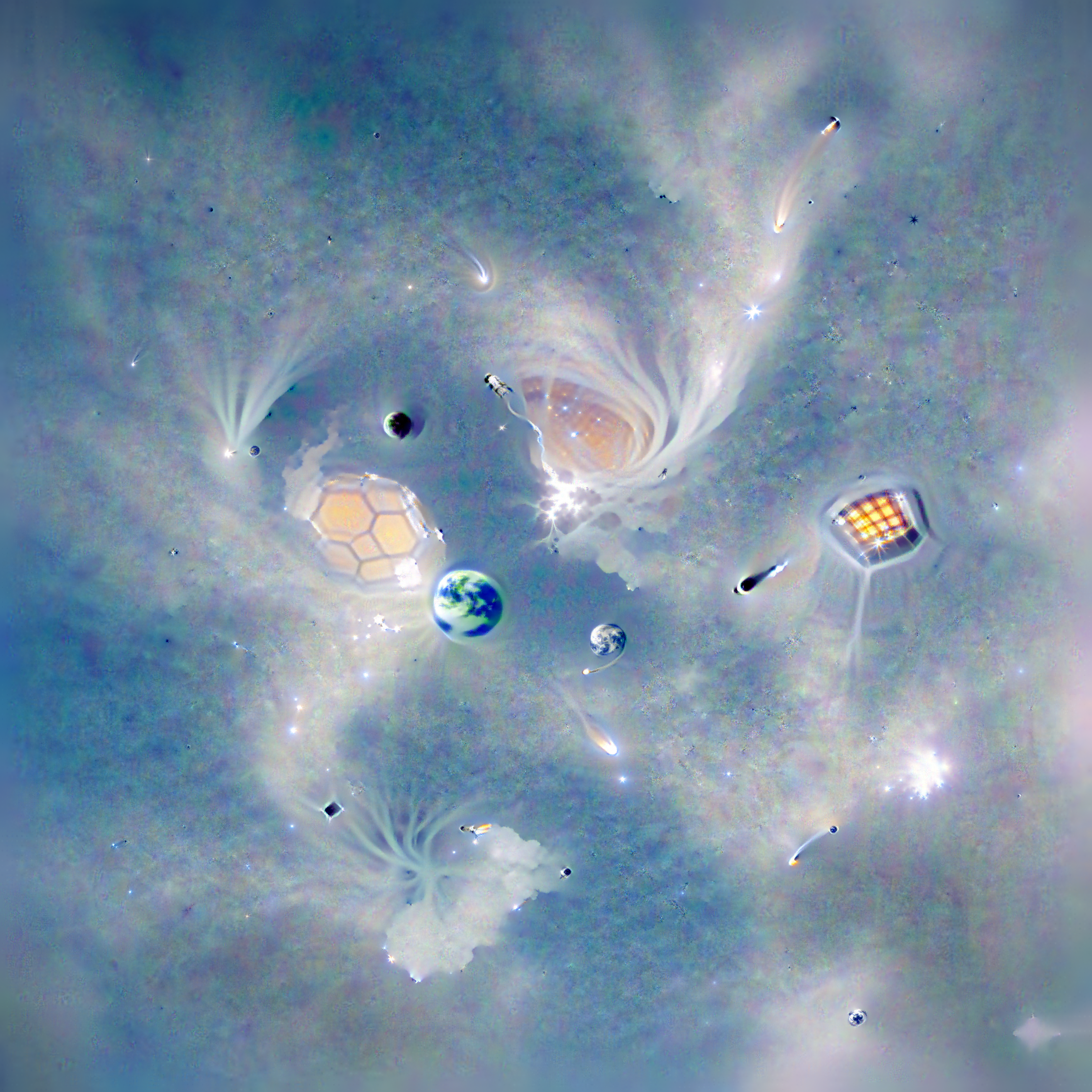

CLIP-guided pixel optimization (Dreams)

CLIP Dream Engine

Semantic Image Optimization Without Diffusion

Overview

What if an AI model could dream?

This project explores a simple but powerful idea:

Instead of training a generative model, what happens if we directly optimize pixels using semantic gradients from CLIP?

CLIP is not a generative model.

It only measures similarity between images and text.

This system flips that role.

CLIP becomes the semantic judge, and the image itself becomes the trainable parameter.

No diffusion.

No GAN.

No dataset training.

Just:

Gradients + Constraints + Multi-scale Optimization.

Code & Experiments

- Notebook: dreams.ipynb

- Script: dreams_script.py

- PDF explanation: dreams.pdf

Run the notebook after setting:

- Base image

- Text prompt

- Number of octaves

- Number of optimization steps

Then observe how meaning emerges from gradients.

Core Idea

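Given:

- Base image $I$

- Text prompt $T$

We optimize the image itself, starting from the base image:

$$I^{*} = \arg\max_{I}\ \cos\big(E_{\text{img}}(I),\ E_{\text{txt}}(T)\big)$$

where $E_{\text{img}}$ and $E_{\text{txt}}$ are CLIP's image and text encoders. The goal is to maximize semantic similarity in CLIP's embedding space.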

The image is directly updated in pixel space using gradient descent.
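A minimal sketch of this loop, assuming PyTorch. To keep it self-contained, a fixed random linear projection stands in for CLIP's image encoder and a random unit vector stands in for the encoded prompt; `encode_image`, `proj` and `text_emb` are hypothetical placeholders, not the project's code. The point is only that the image tensor is the parameter the optimizer updates.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)

# Hypothetical stand-in for CLIP's image encoder: a fixed random linear
# projection of the flattened image into a 64-d embedding space.
H, W, EMB = 32, 32, 64
proj = torch.randn(3 * H * W, EMB) / (3 * H * W) ** 0.5

def encode_image(img: torch.Tensor) -> torch.Tensor:
    """Map a (1, 3, H, W) image to a unit-norm (1, EMB) embedding."""
    return F.normalize(img.flatten(1) @ proj, dim=-1)

# Hypothetical stand-in for the encoded text prompt T.
text_emb = F.normalize(torch.randn(1, EMB), dim=-1)

# The image is the trainable parameter, initialised from a base image.
base = torch.rand(1, 3, H, W)
image = base.clone().requires_grad_(True)
opt = torch.optim.Adam([image], lr=0.05)

def similarity(img: torch.Tensor) -> float:
    return F.cosine_similarity(encode_image(img), text_emb).item()

start = similarity(image)
for _ in range(200):
    opt.zero_grad()
    # Maximising cosine similarity is the same as minimising its negative.
    loss = -F.cosine_similarity(encode_image(image), text_emb).mean()
    loss.backward()
    opt.step()
end = similarity(image)
print(f"cosine similarity: {start:.3f} -> {end:.3f}")
```

In a real run, `encode_image` would be an OpenCLIP model's image encoder applied to normalized cutouts, and the pixels would also be clamped and regularized as described in the sections below.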

Key Components

1. CLIP Semantic Guidance

- Text prompt acts as an attractor

- Negative prompt acts as a repeller

- Pixels are updated so that the image embedding moves toward the text embedding and away from the negative-prompt embedding
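The attractor/repeller idea can be written as a single loss on embeddings. This sketch assumes unit-normalized CLIP-style embeddings; `guidance_loss` and its `neg_weight` parameter are hypothetical, and the project's actual weighting of the negative prompt is not stated in this README.

```python
import torch
import torch.nn.functional as F

def guidance_loss(img_emb, pos_emb, neg_emb, neg_weight=0.5):
    """Attractor/repeller loss (lower is better).

    img_emb: (N, D) embeddings of the image or of its cutouts
    pos_emb: (1, D) embedding of the text prompt (attractor)
    neg_emb: (1, D) embedding of the negative prompt (repeller)
    neg_weight: hypothetical weight of the repeller term
    """
    img_emb = F.normalize(img_emb, dim=-1)
    toward = F.cosine_similarity(img_emb, pos_emb, dim=-1).mean()
    away = F.cosine_similarity(img_emb, neg_emb, dim=-1).mean()
    # Reward similarity to the prompt, penalise similarity to the negative prompt.
    return -toward + neg_weight * away

# Toy 2-d embeddings: the prompt points along x, the negative prompt along y.
pos = torch.tensor([[1.0, 0.0]])
neg = torch.tensor([[0.0, 1.0]])
print(guidance_loss(pos.clone(), pos, neg).item())  # image matches prompt: -1.0
print(guidance_loss(neg.clone(), pos, neg).item())  # image matches negative: 0.5
```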

2. Multi-Scale Cutouts

Instead of feeding CLIP only the whole image, multiple crops are used:

- Global view

- Mid-scale views

- Local patches

This ensures:

- Global coherence

- Local detail emergence

- Better semantic alignment
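One way to produce the global, mid-scale and local views, assuming PyTorch: the first cutout is the full image and the rest are random square crops of varying size, all resized to the encoder's input resolution. `make_cutouts`, the 224-pixel input size and the crop-size range are illustrative assumptions; the script may sample cutouts differently (for example with extra augmentation).

```python
import torch
import torch.nn.functional as F

def make_cutouts(img, cut_size=224, num_cuts=8, min_frac=0.1, generator=None):
    """Return a (num_cuts, C, cut_size, cut_size) batch of views of img.

    img: (1, C, H, W). Cutout 0 is the whole image (global view; aspect
    ratio is not preserved). The others are random square crops whose side
    is between min_frac and 1.0 of the shorter image side, giving a mix of
    mid-scale views and local patches.
    """
    _, _, h, w = img.shape
    side_max = min(h, w)
    resize = lambda t: F.interpolate(t, size=(cut_size, cut_size), mode="bilinear", align_corners=False)
    cuts = [resize(img)]
    for _ in range(num_cuts - 1):
        frac = min_frac + (1.0 - min_frac) * torch.rand(1, generator=generator).item()
        side = max(1, int(side_max * frac))
        y = torch.randint(0, h - side + 1, (1,), generator=generator).item()
        x = torch.randint(0, w - side + 1, (1,), generator=generator).item()
        cuts.append(resize(img[:, :, y:y + side, x:x + side]))
    # Slicing and interpolation are differentiable, so gradients reach img.
    return torch.cat(cuts, dim=0)

img = torch.rand(1, 3, 256, 384, requires_grad=True)
batch = make_cutouts(img, generator=torch.Generator().manual_seed(0))
print(batch.shape)  # torch.Size([8, 3, 224, 224])
```

The whole batch would then be encoded at once and the similarity averaged over cutouts, so every scale contributes to each pixel update.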

3. Masked Total Variation Regularization

Without regularization, optimization produces:

- Noise

- Hallucinated artifacts

- Chaotic pixel explosions

To fix this, a Masked Total Variation (TV) Loss is added alongside the CLIP objective:

- CLIP decides what should appear

- Masked TV decides how it is allowed to look

Edges are preserved.

Noise is suppressed.

Flat regions remain stable.
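The README does not say how the TV mask is built, so this is a hypothetical edge-aware variant, assuming PyTorch: the mask comes from a guide image (for example the base image) as `exp(-|gradient| / sigma)`, so pixel differences lying on strong guide edges are barely penalised while differences in flat areas are penalised in full. `masked_tv_loss`, `guide` and `sigma` are assumptions, not the project's implementation.

```python
import torch

def masked_tv_loss(img, guide, sigma=0.1):
    """Edge-aware total variation (hypothetical masking scheme).

    img:   (N, C, H, W) image being optimised
    guide: (N, C, H, W) image used to build the mask, e.g. the base image
    """
    # Horizontal and vertical neighbour differences of the optimised image.
    dx = img[..., :, 1:] - img[..., :, :-1]
    dy = img[..., 1:, :] - img[..., :-1, :]
    with torch.no_grad():
        # Mask weights: near 0 across strong guide edges, 1 in flat regions.
        gx = (guide[..., :, 1:] - guide[..., :, :-1]).abs().mean(dim=1, keepdim=True)
        gy = (guide[..., 1:, :] - guide[..., :-1, :]).abs().mean(dim=1, keepdim=True)
        wx = torch.exp(-gx / sigma)
        wy = torch.exp(-gy / sigma)
    return (wx * dx.abs()).mean() + (wy * dy.abs()).mean()

flat = torch.zeros(1, 3, 8, 8)
step_edge = torch.zeros(1, 3, 8, 8)
step_edge[..., 4:] = 1.0  # vertical edge between columns 3 and 4
img = step_edge.clone().requires_grad_(True)

loss_unmasked = masked_tv_loss(img, guide=flat)      # edge absent from guide: penalised
loss_masked = masked_tv_loss(img, guide=step_edge)   # edge present in guide: nearly free
print(loss_unmasked.item(), loss_masked.item())
```

The same step edge costs almost nothing when the guide also has it, which is the "edges are preserved, noise is suppressed" behaviour described above.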

4. Octave-Based Optimization

The image is optimized at progressively larger scales (octaves):

Low-resolution octaves establish global structure.

Higher octaves refine details.

This prevents:

- High-frequency collapse

- Early-stage noise explosion
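A coarse-to-fine loop sketch, assuming PyTorch: each octave starts from the previous octave's result, upsampled, and continues optimising, so structure found at low resolution is refined rather than rediscovered. The octave scale of 1.4 is a common choice in DeepDream-style code and is an assumption here, as are `octave_sizes`, `optimize_octaves` and `toy_loss`, which stands in for the CLIP + TV objective.

```python
import torch
import torch.nn.functional as F

def octave_sizes(h, w, num_octaves=4, octave_scale=1.4):
    """(height, width) for each octave, smallest first, ending at full size."""
    return [
        (max(1, round(h / octave_scale ** i)), max(1, round(w / octave_scale ** i)))
        for i in reversed(range(num_octaves))
    ]

def optimize_octaves(base, loss_fn, num_octaves=4, octave_scale=1.4, steps=50, lr=0.05):
    image = base.detach()
    for size in octave_sizes(base.shape[-2], base.shape[-1], num_octaves, octave_scale):
        # Resize the previous result (the base image on the first pass) and
        # make it a fresh leaf tensor for this octave's optimiser.
        image = F.interpolate(image.detach(), size=size, mode="bilinear", align_corners=False)
        image.requires_grad_(True)
        opt = torch.optim.Adam([image], lr=lr)
        for _ in range(steps):
            opt.zero_grad()
            loss_fn(image).backward()
            opt.step()
            with torch.no_grad():
                image.clamp_(0.0, 1.0)  # keep pixels in [0, 1]
    return image.detach()

def toy_loss(img):
    # Stand-in objective pulling every pixel towards 0.8.
    return ((img - 0.8) ** 2).mean()

base = torch.rand(1, 3, 64, 96)
print(octave_sizes(64, 96))
result = optimize_octaves(base, toy_loss)
print(result.shape)  # torch.Size([1, 3, 64, 96])
```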

5. Pixel Clamping

The CLIP objective is purely mathematical.

It has no notion of physically valid colors.

Optimization can push RGB values to unrealistic ranges.

Solution:

- Clamp pixel values to [0, 1]

- Maintain physically valid colors
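A minimal demonstration, assuming PyTorch: one unconstrained step pushes every pixel out of range, and an in-place clamp under `torch.no_grad()` restores the valid range without recording the operation in autograd. Clamping after each optimizer step is a common pattern; whether the script clamps at exactly this point is an assumption.

```python
import torch

image = torch.rand(1, 3, 16, 16, requires_grad=True)
opt = torch.optim.SGD([image], lr=1.0)

# A toy loss whose gradient pushes every pixel upward without limit.
loss = -(10.0 * image).sum()
loss.backward()
opt.step()  # each pixel increases by 10, far outside [0, 1]
raw_max = image.max().item()

# Project back onto the valid range in place, outside autograd.
with torch.no_grad():
    image.clamp_(0.0, 1.0)

print(raw_max)  # > 1.0 before clamping
print(image.min().item(), image.max().item())  # 1.0 1.0 after clamping
```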

What This Project Is

- Energy-based generative system

- CLIP-guided semantic hallucination engine

- Research exploration of optimization-driven generation
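Read as an energy-based system, the components above combine into one objective minimized over the pixels, subject to the [0, 1] constraint. Here $E_{\text{img}}$ and $E_{\text{txt}}$ are CLIP's image and text encoders, $c_1, \dots, c_K$ are the multi-scale cutouts, $T_{\text{neg}}$ is the negative prompt, and $M$ is the TV mask; the weights $\lambda_{\text{neg}}$ and $\lambda_{\text{TV}}$ are notation introduced for illustration, not values taken from the script:

$$
\mathcal{E}(I) = \frac{1}{K}\sum_{k=1}^{K}\Big[-\cos\big(E_{\text{img}}(c_k(I)),\, E_{\text{txt}}(T)\big) + \lambda_{\text{neg}}\cos\big(E_{\text{img}}(c_k(I)),\, E_{\text{txt}}(T_{\text{neg}})\big)\Big] + \lambda_{\text{TV}}\,\mathrm{TV}_M(I)
$$

$$
\mathrm{TV}_M(I) = \sum_{x,y} M_{x,y}\big(|I_{x+1,y} - I_{x,y}| + |I_{x,y+1} - I_{x,y}|\big),
\qquad
I^{*} = \arg\min_{I \in [0,1]^{3 \times H \times W}} \mathcal{E}(I)
$$

With $\lambda_{\text{neg}} = \lambda_{\text{TV}} = 0$ and the whole image as the only cutout, minimizing $\mathcal{E}$ is the same as maximizing the cosine similarity in the Core Idea.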

What This Project Is Not

- Not a diffusion model

- Not a GAN

- Not trained on datasets

- Not an image editor

Technical Stack

- PyTorch

- OpenCLIP

- Multi-scale augmentation

- Custom Masked TV Loss

- Cosine LR scheduling

- Mixed precision (CUDA support)
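A sketch of how cosine LR scheduling and CUDA mixed precision typically wrap a pixel optimizer in PyTorch, using `torch.optim.lr_scheduler.CosineAnnealingLR` and `torch.autocast`. The quadratic loss is a placeholder for the CLIP + TV objective, autocast is simply disabled when no GPU is present, and the learning rate and step count are assumptions rather than the script's settings.

```python
import torch

use_cuda = torch.cuda.is_available()
device = "cuda" if use_cuda else "cpu"
amp_dtype = torch.float16 if use_cuda else torch.bfloat16  # unused when disabled

image = torch.rand(1, 3, 32, 32, device=device, requires_grad=True)
opt = torch.optim.Adam([image], lr=0.05)
steps = 100
# Cosine decay of the learning rate from 0.05 towards eta_min=0 over `steps` updates.
sched = torch.optim.lr_scheduler.CosineAnnealingLR(opt, T_max=steps, eta_min=0.0)

lrs = []
for _ in range(steps):
    opt.zero_grad()
    # Mixed precision only on CUDA. Real float16 runs usually also use a
    # gradient scaler to avoid underflow; omitted here for brevity.
    with torch.autocast(device_type=device, dtype=amp_dtype, enabled=use_cuda):
        loss = ((image - 0.5) ** 2).mean()  # placeholder for CLIP + TV loss
    loss.backward()
    opt.step()
    with torch.no_grad():
        image.clamp_(0.0, 1.0)
    sched.step()
    lrs.append(sched.get_last_lr()[0])

print(lrs[0], lrs[steps // 2 - 1], lrs[-1])
```

Large early steps let the image move quickly toward the prompt, while the decaying rate in later steps lets fine detail settle instead of oscillating.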

Conceptual Summary

This project demonstrates that meaningful visual structure can emerge from:

Semantic similarity + Multi-scale views + Regularization.

No sampling.

No training.

Just optimization.